Multimodality as Supervision.

Data sensed in a deployment environment is often multimodal: besides RGB images, it can include modalities such as depth, motion sensing, surface normals, and tactile signals.

This enables cross-modal learning, i.e., predicting the response of one sensor from another, as a self-supervised way to learn a representation.

We leverage this concept to learn a rich joint representation of the test space in a self-supervised manner, with minimal reliance on external data.

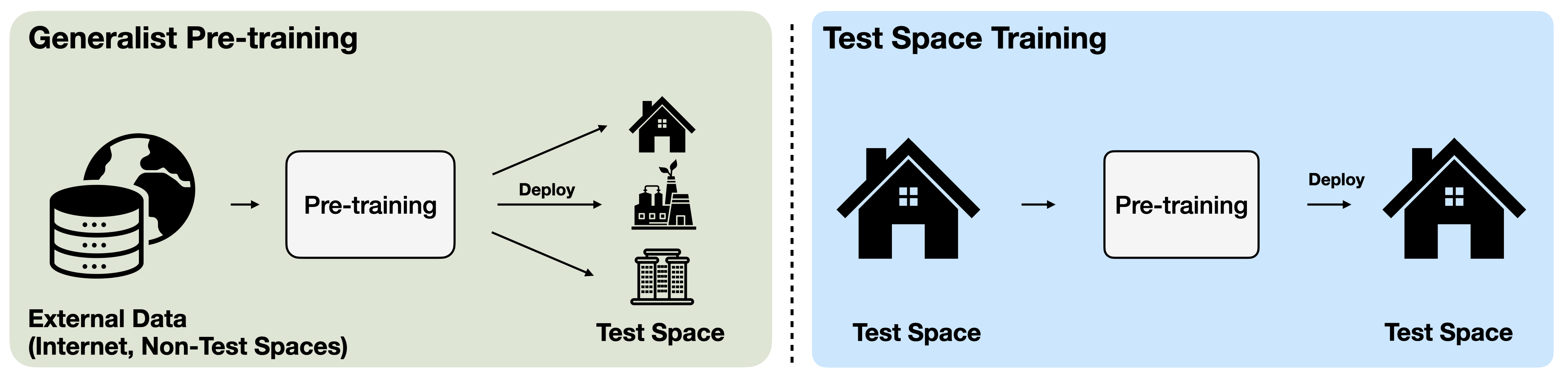

A standard approach to train and deploy vision models in a desired test space is generalist pre-training.

It uses external data, such as images from the Internet or from other spaces similar to the test space.

As an alternative, we study multimodal Test-Space Training, which performs self-supervised pre-training on unlabeled multimodal data collected in the test space.

This yields a model specialized to that space that achieves state-of-the-art performance using only data from the test space.

We evaluate this approach on several downstream tasks (here, semantic segmentation) and show that test-space specialists compare favorably against strong generalist pre-training baselines, including those trained on large-scale Internet-based datasets or on many other external spaces.

Summary

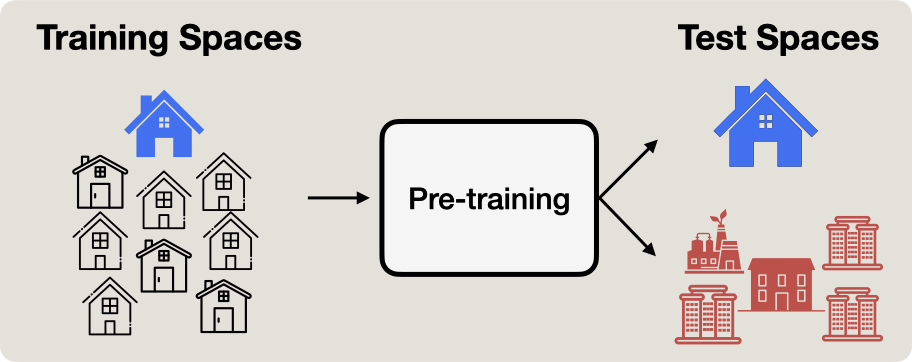

The common approach to developing a vision model is generalism: training on a large, diverse dataset that covers varied deployment environments, which leads to a model that is expected to work everywhere.

However, many practical applications operate in a specific test space, e.g., a robot deployed in a single house, and do not necessarily need to generalize to novel environments.

In this work, we explore whether we can use rich multimodal data only from the test environment to pre-train a performant representation using self-supervised learning.

We find that this approach, with no access to external data, achieves results competitive with generalist models (e.g., CLIP and DINOv2) pre-trained on large-scale Internet datasets.

We can further improve performance by scaling modalities, i.e., by leveraging the outputs of off-the-shelf models in the test space, and thereby outperform various Internet-based generalists and task-specialist models.

We study the effectiveness of this approach by evaluating the models on various datasets and downstream tasks, such as semantic segmentation, captioning, and object detection, and we extract further insights through a set of ablations and analyses.

This approach raises intriguing questions about substituting data with (multi)modality, enabling an alternative scenario in which the need for external Internet-scale datasets for pre-training is reduced.

It also shows that test-space data alone was insufficient for achieving competitive results; multimodality was key.

Overview

Introduction

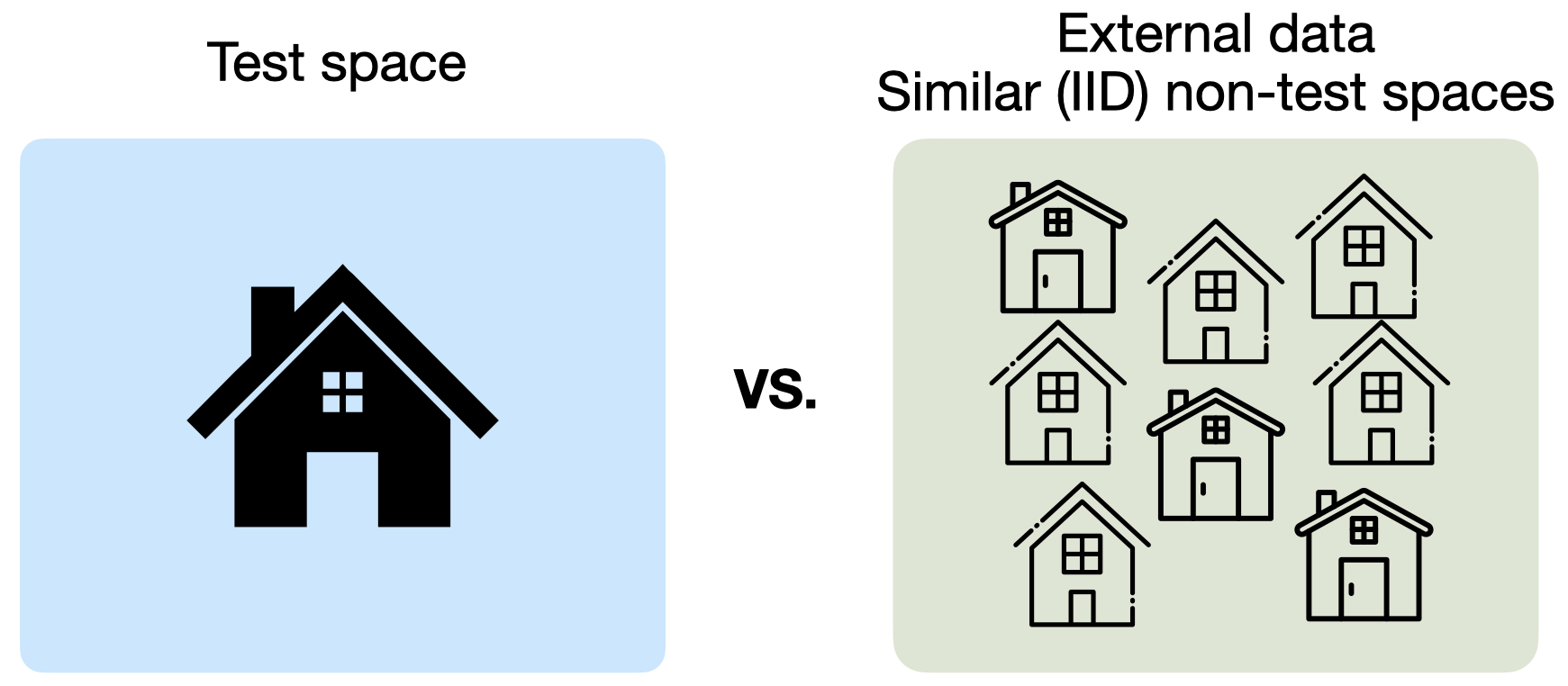

Figure 1. Vision models in many practical applications are required to operate within a certain test space, for instance, a user's home.

These scenarios can significantly benefit from using specialist models explicitly optimized for the particular test space, regardless of their generalization performance elsewhere.

Consider this: you wish to deploy an interactive AI assistant in your home, and this assistant is equipped with a vision model.

This vision model needs to understand the visual properties of your home, or as we call it, the test space.

In many similar practical scenarios, such as household robotics, augmented reality, and interactive home assistants, computer vision systems need to operate in unique environments, like a user's living space.

A natural question to ask is: how can we build performant vision models for this test space?

Figure 2. In this work, we study whether we can build performant models for the test space by collecting modality-rich data in the test space alone, as opposed to the de facto approach of using large-scale external data, like the Internet. Note that such modality-rich data collection is infeasible at Internet scale, due to the additional cost and unavailability of sensory data acquisition over billions of images from disconnected external spaces.

The de facto approach for such applications is to use generalist models trained on large-scale Internet-based datasets.

These models are generally trained once, and the same model is then deployed across various spaces, relying on its generalization ability to perform well in the test space.

In this work, we show that we do not always need large-scale external datasets like the Internet to build performant models for such applications.

We show that we can build state-of-the-art models for the test space by leveraging multimodality and using only unlabeled data from the test space of interest.

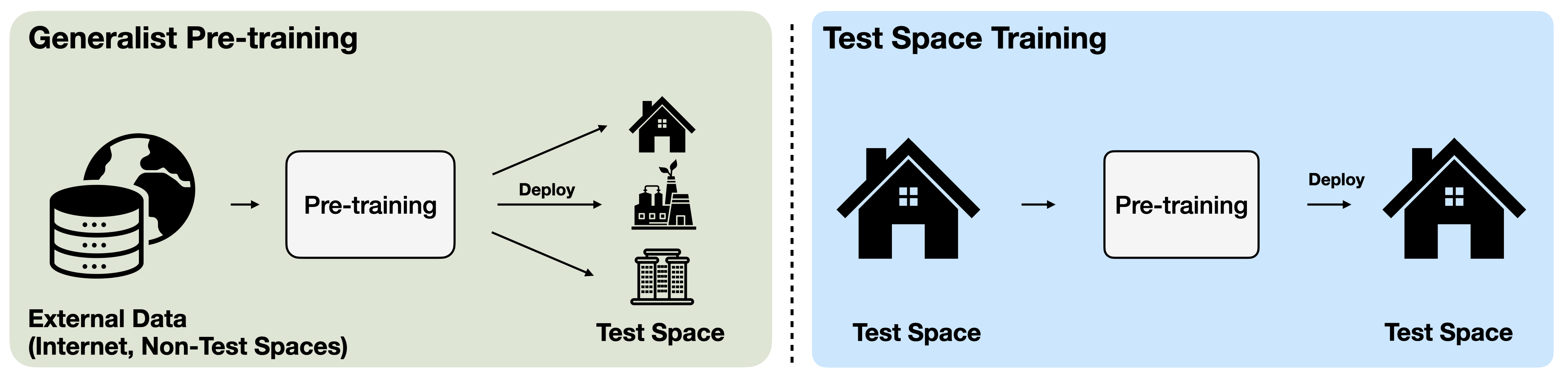

Figure 3. Generalist models (left) are pre-trained once on large-scale Internet-based datasets and then deployed to various downstream spaces.

Test-space training (right), in contrast, pre-trains the models on unlabeled data from the test space itself.

We show that test-space training results in specialist models that outperform generalist models when evaluated on the test space.

To this end, we develop Test-Space Training (TST), a framework that enables pre-training specialist models tailored to a test space.

TST leverages access to the test space, which is common for many practical applications, such as household robotics, augmented reality, and interactive home assistants.

It collects unlabeled pre-training data from the test space for self-supervised learning, as opposed to using external datasets like the Internet.

Importantly, collecting data directly in the space enables rich multimodal data collection via any user device, e.g., a household robot or a mobile phone.

Figure 4. Platforms like a household robot, or simply a user's mobile phone, are often equipped with a rich set of sensors that can provide multimodal data beyond RGB images, like depth maps and surface normals.

Figure 5. Cross-Modal Learning. Predicting the response of one sensor from another has been shown to be a strong signal for learning representations of the world. Each sensor provides a different view of the same underlying physical world.

Next, to perform self-supervised learning on this data, we draw inspiration from findings in developmental psychology suggesting that cross-modal learning (Fig. 6) is a potent signal for learning representations of the physical world.

We implement this cross-modal learning with multimodal masked modeling as our pre-training objective, leading to TST-MM.

We find that this multimodal pre-training outperforms the unimodal one, with more modalities consistently leading to better performance.
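To make "multimodal masked modeling" concrete, here is a minimal sketch of how one training sample's inputs and targets could be chosen. It assumes each modality has already been tokenized into a fixed number of tokens (the tokenizers are not shown), and it follows the 4M-style idea of splitting a fixed input budget and target budget across modalities with a Dirichlet draw. The function name `sample_multimodal_mask`, the budgets, and `alpha` are illustrative assumptions, not TST-MM's actual configuration.

```python
import numpy as np


def sample_multimodal_mask(num_tokens, input_budget, target_budget, alpha=1.0, rng=None):
    """Pick visible input tokens and prediction targets for every modality.

    num_tokens: dict mapping modality name -> number of tokens of that modality.
    Returns a dict mapping modality name -> (input_indices, target_indices),
    two disjoint, sorted index arrays. Per-modality counts are rounded, so the
    totals may differ slightly from the requested budgets.
    """
    rng = np.random.default_rng() if rng is None else rng
    names = list(num_tokens)
    # Random share of each budget assigned to each modality (hypothetical alpha).
    in_share = rng.dirichlet([alpha] * len(names))
    tgt_share = rng.dirichlet([alpha] * len(names))
    masks = {}
    for name, p_in, p_tgt in zip(names, in_share, tgt_share):
        n = num_tokens[name]
        perm = rng.permutation(n)  # random order of this modality's token positions
        n_in = min(int(round(p_in * input_budget)), n)
        n_tgt = min(int(round(p_tgt * target_budget)), n - n_in)  # targets never overlap inputs
        masks[name] = (np.sort(perm[:n_in]), np.sort(perm[n_in:n_in + n_tgt]))
    return masks


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical: three modalities, each tokenized into a 14x14 grid (196 tokens).
    tokens = {"rgb": 196, "depth": 196, "normals": 196}
    masks = sample_multimodal_mask(tokens, input_budget=128, target_budget=128, rng=rng)
    for name, (inp, tgt) in masks.items():
        print(name, len(inp), "inputs,", len(tgt), "targets")
```

A model would then encode only the selected input tokens (from any modality) and be trained to predict the target tokens, which is what makes the objective cross-modal: depth tokens can be reconstructed from RGB tokens and vice versa.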

Figure 6. We show that rich multimodal data collection in the test space itself leads to specialist models that outperform generalist models trained on billion-scale Internet images.

Our specialist models, pre-trained with cross-modal learning, leverage multimodality as a strong self-supervision signal, leading to better performance in the test space.

Method Overview

Multimodal Test-Space Training (TST) is a framework designed to develop highly performant models specialized to the test space. Instead of relying on large-scale Internet datasets, TST collects multimodal pre-training data in the test space itself and performs self-supervised learning on it. The method consists of the following steps:

1. Data Collection: We collect pre-training data from the test space by placing a camera at various points to cover the space densely.

In addition to RGB frames, we collect data from various sensors and modalities available in the test space, such as 3D data using a depth sensor.

We can optionally process this data to create additional modalities using off-the-shelf pseudo-labeling networks.

This results in an aligned multimodal dataset that we use for pre-training.

2. Self-Supervised Pre-training: We employ self-supervised learning to pre-train a task-agnostic representation learning model on the multimodal data from the test space.

We explore different self-supervised learning objectives: masked modelling (MAE, 4M) and contrastive learning (DINOv2).

Among these, we found multimodal masked modelling to be the most effective for our setup. We refer to this multimodal variant as TST-MM.

3. Transfer Learning: We evaluate the effectiveness of the pre-trained model by performing transfer learning evaluations.

For this stage, we assume access to a small external dataset, with task-specific annotations, e.g., semantic segmentation masks or image captions.

We add a task-specific head to the pre-trained model and finetune it on this external dataset.

Importantly, we do not have access to any task-specific annotations from the test space itself.

We benchmark against various off-the-shelf vision models, by finetuning them on the same transfer data.

4. Deployment & Evaluation: The fine-tuned model is deployed in the same test space where it was pre-trained. We benchmark against various internet pre-trained generalists, and task-specific models.

We evaluate on three vision tasks, namely semantic segmentation, object detection, and image captioning.

We show that TST-MM outperforms all baselines, including those trained on large-scale Internet datasets or on many spaces similar to the test space.
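For the semantic segmentation evaluation, this page does not name the metric; mean intersection-over-union (mIoU) is the standard choice, so the sketch below assumes it. It computes mIoU from a confusion matrix between predicted and ground-truth label maps. The `ignore_index=255` convention for unlabeled pixels is an assumption, not something the page specifies.

```python
import numpy as np


def mean_iou(pred, gt, num_classes, ignore_index=255):
    """Mean intersection-over-union between two integer label maps of equal shape."""
    pred = np.asarray(pred)
    gt = np.asarray(gt)
    valid = gt != ignore_index  # skip pixels without a ground-truth label
    p = pred[valid].astype(np.int64)
    g = gt[valid].astype(np.int64)
    # Confusion matrix: rows are ground-truth classes, columns are predicted classes.
    conf = np.bincount(g * num_classes + p, minlength=num_classes ** 2)
    conf = conf.reshape(num_classes, num_classes)
    tp = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp
    present = union > 0  # classes absent from both maps are not averaged
    return float(np.mean(tp[present] / union[present]))


if __name__ == "__main__":
    gt = np.array([[0, 0], [1, 1]])
    pred = np.array([[0, 1], [1, 1]])
    print(mean_iou(pred, gt, num_classes=2))
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.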

Test-Space Training vs. Internet-based methods

We compare Test-Space Training, specifically our TST-MM model, against various Internet-based methods.

We compare against generalist models trained on large-scale datasets, such as CLIP, DINOv2, and 4M.

Additionally, we compare against task-specific models, such as Mask2Former for semantic segmentation and ViTDet for object detection.

Please refer to the paper for more results on other datasets and tasks.

Semantic Segmentation

We compare against generalist models like CLIP, DINOv2, 4M trained on large-scale internet datasets, and show that TST-MM outperforms them on various downstream tasks.

This suggests that we can outperform large-scale generalist models trained on millions of images from the Internet by using data from the test space.

We present qualitative video evaluations of semantic segmentation on the ScanNet++ and Replica datasets.

We also present a comparison against Mask2Former, an off-the-shelf task specialist model for semantic segmentation.

Note how TST-MM predictions are notably more consistent across various viewpoints, showing the value of having access to the test space during pre-training.

Quantitative Results

We present a quantitative comparison on semantic segmentation, object detection, and image captioning, using the ScanNet++, Replica, and ProcTHOR datasets. We find that TST-MM outperforms or is on par with all Internet-based models, including self-supervised generalists trained on large-scale Internet datasets and task-specialist models.

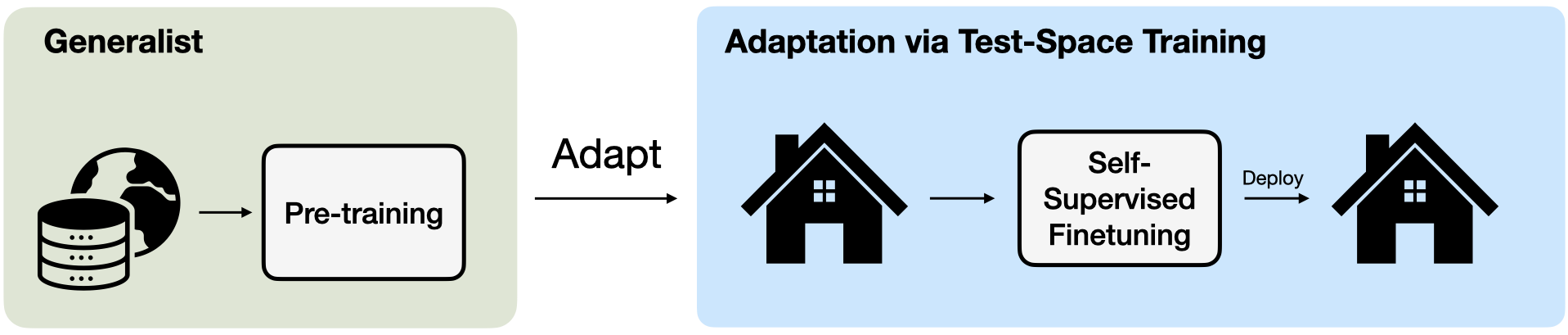

Adaptation

For all the results until this point, we start from scratch and train the model from random initialization. However, TST can also serve as an adaptation mechanism for existing generalist models, making them more performant in the test space.

Here, we start from a pre-trained 4M-21 model and fine-tune it on data from the test space, using the multimodal masked modeling objective of TST-MM.

We find that the resulting model, TST-MM (adapted), significantly improves the performance over 4M-21 in the test space.

Ablations and Analysis

I. How far can we go with just access to the test space?

We first explore if we can substitute a large-scale Internet-based dataset with just physical access to the test space for collecting pre-training data.

To probe this, we pre-train a model with multimodal masked modeling using only sensory modalities (TST-MM, Sensors only) collected from the test space.

Here, we refer to RGB images, depth maps, surface normals, and Canny edges as sensory modalities, as they can be collected directly with hardware sensors or are simple mathematical transformations of sensor outputs.

This model has no access to the external world whatsoever, neither for data nor for pseudo-labeling networks to create additional modalities.

We find that pre-training with multimodal masked modeling using only sensory data collected from the test space outperforms pre-training on millions of frames collected from the Internet.


Note that the Internet baseline, 4M-21, also benefited from training on pseudo-labels provided by strong off-the-shelf models, such as CLIP, DINOv2, ImageBind, and SAM, yet it still falls behind pre-training on sensory data collected directly in the test space.
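The sensors-only setting counts surface normals as a simple transformation of sensor outputs. The page does not say exactly how normals are derived, so the following is only a sketch of one common way to get them from a depth map. It assumes an orthographic camera with unit pixel spacing, so the surface is z = depth(x, y) and an unnormalized normal is (-dz/dx, -dz/dy, 1); a real pipeline would back-project pixels with the camera intrinsics first. The function name is hypothetical.

```python
import numpy as np


def normals_from_depth(depth):
    """Per-pixel unit surface normals, (H, W) depth map -> (H, W, 3) array.

    Simplifying assumption: orthographic projection and unit pixel spacing.
    Requires at least 2 pixels along each axis (needed by np.gradient).
    """
    depth = np.asarray(depth, dtype=np.float64)
    # np.gradient returns derivatives along axis 0 (rows, y) and axis 1 (cols, x).
    dz_dy, dz_dx = np.gradient(depth)
    n = np.stack([-dz_dx, -dz_dy, np.ones_like(depth)], axis=-1)
    return n / np.linalg.norm(n, axis=-1, keepdims=True)


if __name__ == "__main__":
    # A plane tilted along x: depth grows by 1 per column.
    ramp = np.tile(np.arange(5.0), (4, 1))
    print(normals_from_depth(ramp)[0, 0])
```

A flat depth map yields normals of (0, 0, 1) everywhere, and the tilted ramp yields (-1, 0, 1)/sqrt(2), which is the kind of geometry signal a cross-modal objective can learn to predict from RGB.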

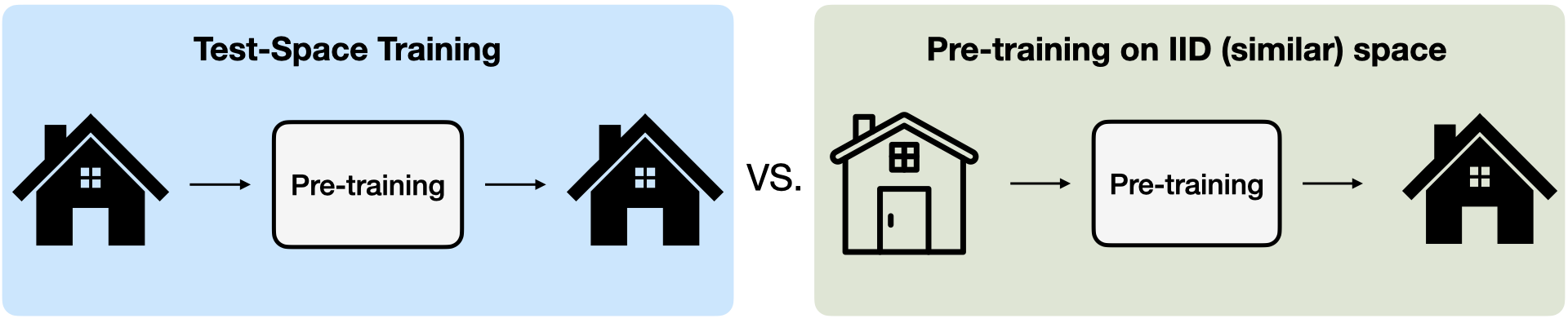

II. Does it specialize to the test space?

The goal of test-space training is to learn a specialized vision model for a given space.

In this analysis, we attempt to measure this specialization using cross-house evaluations.

Specifically, we ask: do we really need access to the test space itself, or can we substitute it with a similar space?

By a similar space, we mean one that is similar in layout and furniture appearance, but not necessarily the same.

We consider 3 spaces.

We pre-train a model in each space and evaluate it in all 3 spaces.

As can be seen in the figure below, performance is highest along the diagonal, where pre-training and evaluation occur within the same space, showing the value of test-space specialization.
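The cross-house evaluation can be summarized as a square score matrix whose rows index the pre-training space and whose columns index the evaluation space. The sketch below, a minimal illustration with a hypothetical helper name, measures how much the diagonal (same-space) entries exceed the off-diagonal ones and checks whether each evaluation space is best served by the model pre-trained in it. The numbers in the usage example are toy placeholders, not results from the paper.

```python
import numpy as np


def specialization_gap(scores):
    """scores[i, j] = metric of the model pre-trained in space i, evaluated in space j.

    Returns (diagonal mean minus off-diagonal mean, boolean array telling for
    each evaluation space whether its own pre-trained model scores highest).
    """
    s = np.asarray(scores, dtype=np.float64)
    diag = np.diag(s)
    off = s[~np.eye(len(s), dtype=bool)]  # all entries where train space != eval space
    best_is_own = np.argmax(s, axis=0) == np.arange(len(s))  # best row per column
    return diag.mean() - off.mean(), best_is_own


if __name__ == "__main__":
    toy = [[3, 1, 1], [1, 3, 1], [1, 1, 3]]  # placeholder values only
    gap, own = specialization_gap(toy)
    print(gap, own)
```

A positive gap together with an all-True `best_is_own` is the numerical counterpart of "performance is highest along the diagonal".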

III. Specialization-Generalization Tradeoff

We observed that the best performance on a given test space is achieved when pre-trained on the same space.

However, we would expect this specialized model to not generalize well to new houses.

Can we keep (or improve) this specialization performance while gaining generalization capabilities by adding more houses during pre-training in addition to the test house?

As the plot above shows, as we add more houses during pre-training, the performance on the held-out new houses increases, as expected.

However, the performance on the original test space drops compared to the specialist single-space pre-training, demonstrating a specialization-generalization trade-off in the pre-trained model.

IV. How much external data is 1 test space worth?

We find that pre-training on the test space is important for specialization, and cannot be substituted by a similar space.

However, we take this one step further, and ask, can we substitute test-space data with data from many similar spaces? How many spaces would we need?

We use ProcTHOR to generate a large number of similar houses (IID to the test space) and pre-train models using an increasing number of them.

The curve below shows the performance of each model on a test space not seen during pre-training, compared to pre-training on that test space itself.

We find that even thousands of similar spaces are not enough to substitute pre-training on the exact same space that we deploy in.
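One way to build the "increasing number of similar houses" pre-training sets is to draw them as nested subsets from a single shuffled order, so each larger set contains every smaller one and differences along the curve come from adding houses rather than from resampling. Nesting is our assumption; the page does not say how the subsets were drawn. The helper name, house IDs, and split sizes below are hypothetical.

```python
import random


def nested_house_splits(house_ids, sizes, test_house, seed=0):
    """Return {n: list of n house IDs}, with smaller splits nested in larger ones.

    The test house is always excluded, so it is never seen during pre-training.
    """
    pool = [h for h in house_ids if h != test_house]
    if max(sizes) > len(pool):
        raise ValueError("not enough houses for the largest split")
    rng = random.Random(seed)
    rng.shuffle(pool)  # one fixed random order shared by all split sizes
    return {n: pool[:n] for n in sorted(sizes)}


if __name__ == "__main__":
    # Hypothetical: 10,000 generated houses, house 0 is the held-out test space.
    splits = nested_house_splits(range(10_000), [1, 10, 100, 1_000], test_house=0)
    print({n: len(ids) for n, ids in splits.items()})
```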

V. Scaling data vs Scaling modalities

We study the trade-off between using smaller-scale but modality-rich test-space data, versus large-scale unimodal external data (RGB-only).

Starting from unimodal pre-training within the test space, as the plot below shows, scaling data via additional modalities (scaling modalities) yields significantly better performance than increasing the amount of unimodal data from external sources (scaling data).

This suggests that, for building high-performing models in a specific test space, collecting data within that space using a richer set of modalities is more effective than relying on large-scale, unimodal data collected from external sources.

VI. Is a single modality doing the job?

TST-MM employs cross-modal learning, using various modalities to pre-train a performant specialist model in the test space.

However, to understand whether a single modality contributes most of the improvement over a unimodal model, we conduct a modality-scaling analysis.

We plot a curve starting from an RGB-only model on the left and ending with TST-MM (9 modalities) on the right, adding one modality at a time and averaging results over eight random sets of modalities for each modality count.

Regardless of which modalities are chosen, performance steadily improves as we include more. This demonstrates that simply increasing the modality count, rather than hand-picking modalities, boosts performance, and that TST-MM's gains arise from the modalities' collective interplay, i.e., multimodality.
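The modality-scaling protocol (RGB always present, one more modality at a time, eight random modality sets per count) can be sketched as below. `evaluate` stands in for the full pre-train, fine-tune, and evaluate pipeline and is a hypothetical callable. Only depth, surface normals, and Canny edges are named on this page, so the remaining modality names in the example are placeholders.

```python
import itertools
import random


def modality_subsets(extra_modalities, k, num_draws=8, seed=0):
    """Draw up to num_draws distinct k-subsets of the extra modalities; RGB is always kept."""
    rng = random.Random(seed)
    all_subsets = list(itertools.combinations(extra_modalities, k))
    chosen = rng.sample(all_subsets, min(num_draws, len(all_subsets)))
    return [("rgb",) + subset for subset in chosen]


def scaling_curve(extra_modalities, evaluate, num_draws=8, seed=0):
    """Map total modality count -> score averaged over the random subsets of that size."""
    curve = {}
    for k in range(len(extra_modalities) + 1):
        subsets = modality_subsets(extra_modalities, k, num_draws, seed)
        scores = [evaluate(s) for s in subsets]  # hypothetical train-and-evaluate call
        curve[k + 1] = sum(scores) / len(scores)
    return curve


if __name__ == "__main__":
    # Placeholder names: 8 extra modalities + RGB = 9, matching the TST-MM count.
    extras = ["depth", "normals", "canny", "pseudo_1", "pseudo_2",
              "pseudo_3", "pseudo_4", "pseudo_5"]
    print(modality_subsets(extras, 2)[:3])
```

When a count admits fewer than eight distinct subsets (e.g., RGB only, or all nine modalities), the sketch simply uses every available subset.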

Contribution of different modalities to TST performance.

We study the effect of each modality on TST by dropping one modality at a time from TST-MM and adding one modality at a time to TST-MAE (RGB-only TST).

We use the ViT-S backbone. We find that even though some modalities provide higher gains than others when added to the RGB-only TST-MAE, the performance of TST-MM stays relatively stable regardless of which modality is dropped.
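The drop-one and add-one ablations amount to two families of modality configurations, which the short sketch below enumerates. We assume RGB is never dropped, since it is the input at evaluation time; the page does not state this explicitly. The helper name is hypothetical.

```python
def ablation_configs(full_modalities, base="rgb"):
    """Build drop-one (from the full TST-MM set) and add-one (to RGB-only TST-MAE) configs.

    Both results map the varied modality to the list of modalities used for pre-training.
    """
    others = [m for m in full_modalities if m != base]
    # Drop-one: the full set minus exactly one non-RGB modality.
    drop_one = {m: [x for x in full_modalities if x != m] for m in others}
    # Add-one: RGB plus exactly one other modality.
    add_one = {m: [base, m] for m in others}
    return drop_one, add_one


if __name__ == "__main__":
    drop, add = ablation_configs(["rgb", "depth", "normals", "canny"])
    print(drop["depth"], add["depth"])
```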

VII. TST with other pre-training objectives

In addition to multimodal masked modelling, TST also supports other self-supervised pre-training objectives.

Similar to TST-MM, popular objectives like MAE and DINOv2 also show specialization trends in the cross-house evaluations.

Additionally, we compare the performance of different pre-training objectives under TST on semantic segmentation.

We find that multimodal masked modelling is the best pre-training objective for our setup.

Conclusion & future work

We introduce test-space training (TST), a framework for pre-training specialist vision systems tailored directly to a target test environment.

Unlike the conventional approach of pre-training general-purpose models on large-scale Internet datasets, TST leverages direct physical access to the test space.

This access allows us to densely sample pre-training data and incorporate multiple sensory and off-the-shelf modalities, facilitating multimodal pre-training.

Experimental results show that TST leads to strong performance in the test space for several downstream tasks, outperforming off-the-shelf generalist and task-specific baselines.

Below, we discuss some prospective future directions for Test-Space Training.

- Data Collection Policy. TST relies on data sampled from a test space for pre-training. This sampling is currently agnostic of the pre-training objective. Closing the perception-action loop and connecting data collection to pre-training could further strengthen specialization. Prior work such as SEAL and Interactron has explored learning data collection policies for task-specific models. We plan to explore this in the future for task-agnostic self-supervised representations.

- Pre-training Objective. The objectives we explored in this work are mostly tailored toward large-scale Internet data. Physical access to the test space opens the door to objectives that enforce the inductive biases of a given test space, like viewpoint and cross-modality consistency constraints, as well as temporal/video modeling objectives.

- Incorporating physical modalities. We show that modalities from onboard physical sensors can be an effective source of self-supervision for pre-training in the test space. Our goal in this paper was to develop the basic framework for TST. Expanding this set to other hardware-based sensors, such as audio, motion, proprioception, and touch, can provide an additional signal for pre-training.

Citation

@article{singh2025tst,
  title={Multimodality as Supervision: Self-Supervised Specialization to the Test Environment via Multimodality},
  author={Kunal Pratap Singh and Ali Garjani and Muhammad Uzair Khattak and Rishubh Singh and Jason Toskov and Andrei Atanov and O{\u{g}}uzhan Fatih Kar and Amir Zamir},
  journal={arxiv},
  year={2025}
}